Generative AI Vision

Note

“Generative AI Vision” is a third-party example provided and maintained by ChungYi Fu (Kaohsiung, Taiwan).

Special thanks and credit to all developers for their efforts and contributions.

Materials

AMB82-mini x 1

Example

In this example, we use the Ameba Pro2 development board to capture an image and send it to various LLM servers for visual analysis and understanding.

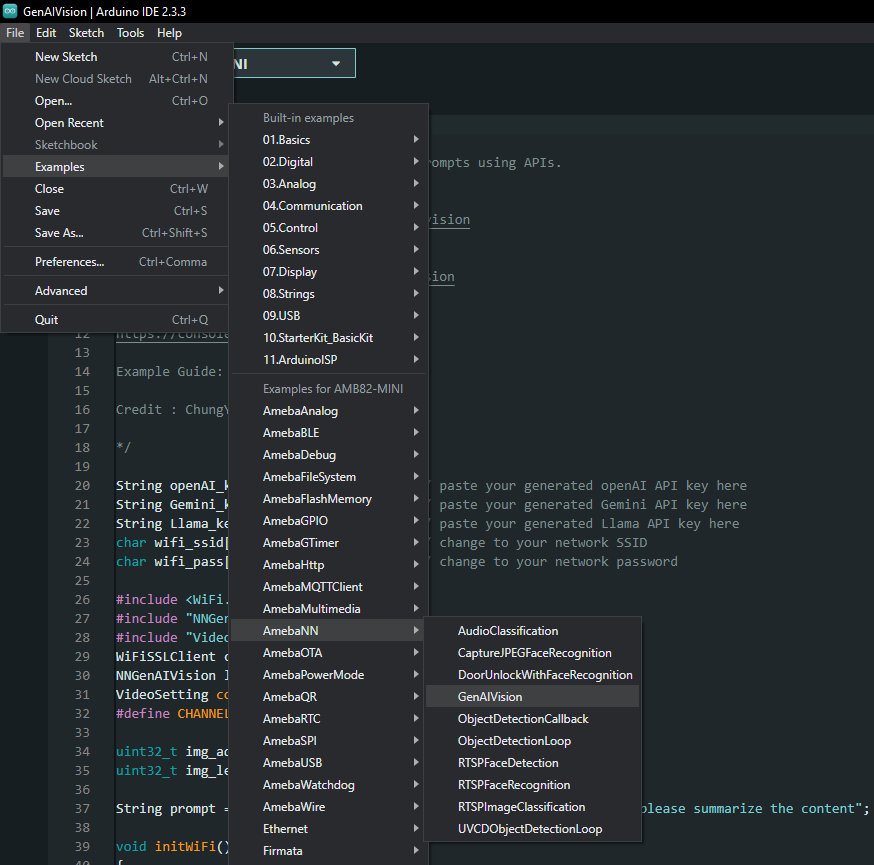

Open the Generative AI Vision example in “File” -> “Examples” -> “AmebaNN” -> “GenAIVision”.

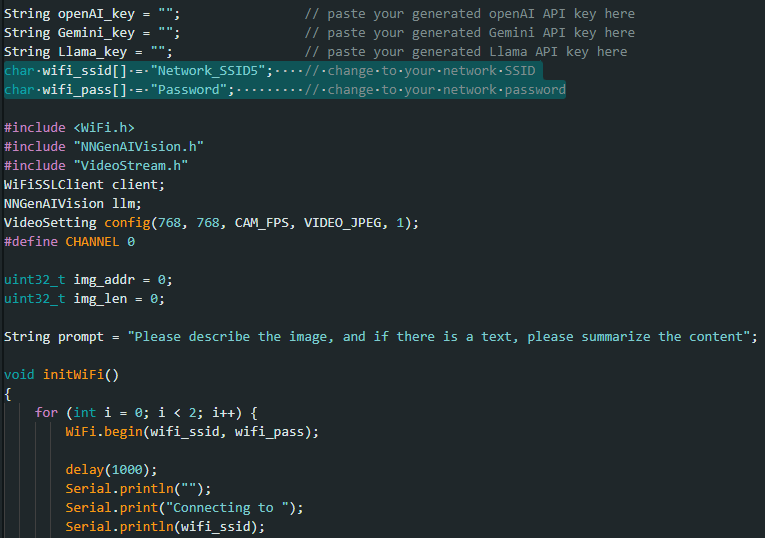

In the highlighted code snippet, fill in the “wifi_ssid” with your WiFi network SSID and “wifi_pass” with the network password.

In this documentation, we use the Gemini API for demonstration.

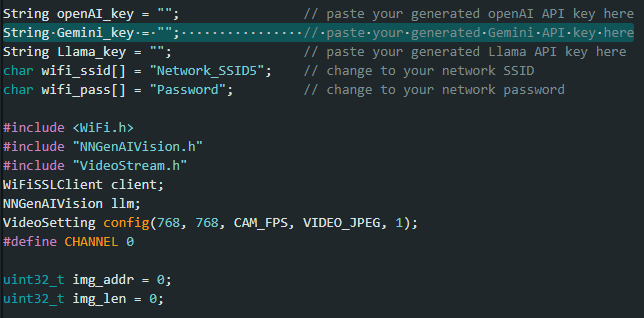

Create your own Gemini API key in Google AI Studio.

Copy your API key and paste it into the Gemini_key section of the code.

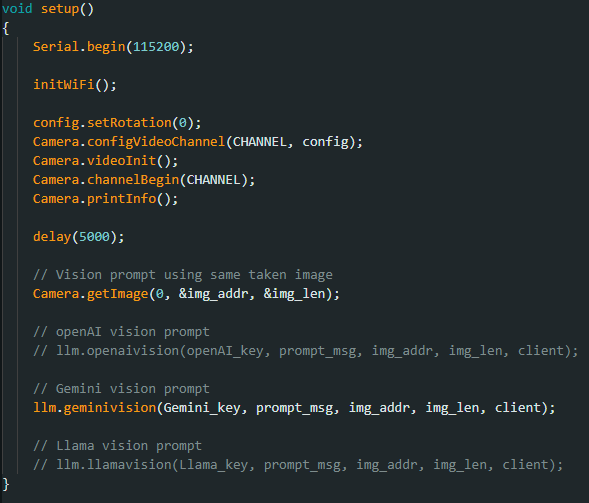

Uncomment the Gemini vision prompt function call so that it is executed.

Compile and upload the firmware to AMB82-mini. Open a serial monitor to view the response.

You may also modify prompt_msg to suit your application's needs.
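Before compiling, it can help to confirm that none of the values mentioned above has been left blank. The following is a minimal sketch in plain C++, not code from the SDK example: `std::string` stands in for the Arduino string types, and the `SketchSettings` struct and `missing_settings` helper are hypothetical names introduced only for illustration. Only the field names `wifi_ssid`, `wifi_pass`, `Gemini_key`, and `prompt_msg` come from the text.

```cpp
#include <string>
#include <vector>

// Hypothetical container for the values the text asks you to fill in.
// std::string is an assumption; the real sketch may use other types.
struct SketchSettings {
    std::string wifi_ssid;   // WiFi network SSID
    std::string wifi_pass;   // WiFi network password
    std::string Gemini_key;  // API key created in Google AI Studio
    std::string prompt_msg;  // prompt sent together with the captured image
};

// Returns the names of all settings that are still empty.
std::vector<std::string> missing_settings(const SketchSettings& s) {
    std::vector<std::string> missing;
    if (s.wifi_ssid.empty())  missing.push_back("wifi_ssid");
    if (s.wifi_pass.empty())  missing.push_back("wifi_pass");
    if (s.Gemini_key.empty()) missing.push_back("Gemini_key");
    if (s.prompt_msg.empty()) missing.push_back("prompt_msg");
    return missing;
}
```

Calling `missing_settings` on a filled-in `SketchSettings` returns an empty vector. Any name it does return points at a value that still needs to be set before uploading the firmware.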

Online LLM Models

The SDK features the following online servers and LLM models:

| Server | Model | Rate Limit | Pricing |
|---|---|---|---|
| OpenAI Platform | gpt-4o-mini | 500 RPM | Chargeable (Tier 1) |
| Google AI Studio | gemini-1.5-flash | 15 RPM | Free of charge |
| GroqCloud | llama-3.2-90b-vision-preview | 15 RPM | Free of charge |

Rate Limit References

OpenAI: https://platform.openai.com/docs/guides/rate-limits?context=tier-one#usage-tiers

Google AI Studio: https://ai.google.dev/pricing#1_5flash

GroqCloud: https://console.groq.com/settings/limits
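A rate limit in requests per minute (RPM) translates directly into a minimum spacing between image requests. The following is a minimal sketch, assuming requests are sent at a fixed interval. The helper name `min_interval_ms` is hypothetical and is not part of the SDK.

```cpp
#include <cstdint>
#include <limits>

// Minimum spacing, in milliseconds, between consecutive requests so that
// a requests-per-minute (RPM) limit is not exceeded.
// The division rounds up, so the spacing never falls below 60000 / rpm.
// An rpm of 0 means no requests are allowed; the largest value is returned.
constexpr std::uint32_t min_interval_ms(std::uint32_t rpm) {
    return rpm == 0 ? std::numeric_limits<std::uint32_t>::max()
                    : (60000u + rpm - 1u) / rpm;
}
```

With the limits listed above, 15 RPM gives a spacing of 4000 ms and 500 RPM gives 120 ms. In an Arduino sketch, a value like this could be passed to `delay()` between captures, although the actual limits enforced by each server may change and should be checked against the references.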